Blog by Sumana Harihareswara, Changeset founder

What Should We Stop Doing? (FLOSS Community Metrics Meeting keynote)

Hi, reader. I wrote this in 2016 and it's now more than five years old. So it may be very out of date; the world, and I, have changed a lot since I wrote it! I'm keeping this up for historical archive purposes, but the me of today may 100% disagree with what I said then. I rarely edit posts after publishing them, but if I do, I usually leave a note in italics to mark the edit and the reason. If this post is particularly offensive or breaches someone's privacy, please contact me.

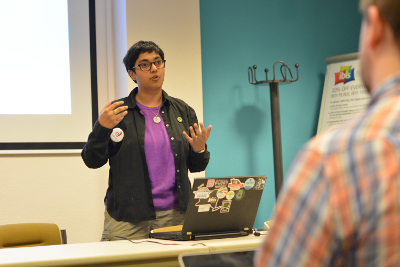

"What should we stop doing?": written version of a keynote address by Sumana Harihareswara, delivered at the FLOSS Community Metrics Meeting just before FOSDEM, 29 January 2016 in Brussels, Belgium. Slide deck is a 14-page PDF. Video is available. The notes I used when I delivered the talk were quite skeletal, so the talk I delivered varied substantially on the sentence level, but covered all the same points.

I'd like to start with a story, about my excellent boss I worked for when I was at the Wikimedia Foundation, Rob Lanphier, and what he told me when I'd been on the job about eight months. In one of our one-on-one meetings, I mentioned to him that I felt overwhelmed. And first, he told me that I'd been on the job less than a year, and it takes a year to ramp up fully in that job, so I shouldn't be too worried. And then he reminded me that we were in an amazing position, that we would hear and get all kinds of great ideas, but that in order to get anything done, we would have to focus. We'd have to learn to say, "That's a great idea, and we're not doing it." And say it often. And, he reminded me, I felt overwhelmed because I actually had the power to make choices, about what I did with my time, that would affect a lot of people. I was not just cog # 15,000 doing a super specialized task at Apple.

I'd like to start with a story, about my excellent boss I worked for when I was at the Wikimedia Foundation, Rob Lanphier, and what he told me when I'd been on the job about eight months. In one of our one-on-one meetings, I mentioned to him that I felt overwhelmed. And first, he told me that I'd been on the job less than a year, and it takes a year to ramp up fully in that job, so I shouldn't be too worried. And then he reminded me that we were in an amazing position, that we would hear and get all kinds of great ideas, but that in order to get anything done, we would have to focus. We'd have to learn to say, "That's a great idea, and we're not doing it." And say it often. And, he reminded me, I felt overwhelmed because I actually had the power to make choices, about what I did with my time, that would affect a lot of people. I was not just cog # 15,000 doing a super specialized task at Apple.

So today I want to talk with you about how to use the power you have, in your open source projects and organizations, and about saying no to a lot of things, so you can focus on doing fewer things well -- the Unix philosophy, right? I'll talk about a few tools and leave you with some questions.

Tool 1: Remember to say no to the lamppost fallacy

The lamppost fallacy is an old one, and the story goes that a drunk guy says, "I dropped my keys, will you help me look for them?" "OK, sure. Where'd you drop them?" "Under that tree." "So why are you looking for them under this lamppost?" "Well, the light is better here."

A. Quantitative vs qualitative in the dev data

The first place we ought to check for the lamppost fallacy is in overvaluing quantitative metrics over qualitative analysis when looking at developer workflow and experience. Dave Neary said, in the FLOSSMetrics meeting in 2014, in "What you measure is what you get. Stories of metrics gone wrong": Use qualitative and quantitative analysis to interpret metrics.

When it comes to developer experience, you can be analytical while both quantitative and qualitative. And you rather have to be, because as soon as you start uncovering numbers, you start asking why they are what they are and what could be done to change that, and that's where the qualitative analytical approach comes in.

Qualitative is still analytical! Camille Fournier's post, "Qualitative or quantitative but always analytical", goes into this:

qualitative is still analytical. You may not be able to use data-driven reasoning because you're starting something new, and there are no numbers. It is hard to do quantitative analysis without data, and new things only have secondary data about potential and markets, they do not have primary data about the actual user engagement with the unbuilt product that you can measure. Furthermore, even when the thing is released, you probably have nothing but "small" data for a while. If you only have a thousand people engaging with something, it is hard to do interesting and statistically significant A/B tests unless you change things drastically and cause massive behavioral changes.

This is applicable to developer experience as well!

For help, I recommend the Wikimedia movement's Grants Evaluation & Learning team's table discussing quantitative and qualitative approaches you can take: ethnography, case studies, participant observation, and so on. To deepen understanding. It's complementary with the quantitative side, which is about generalizing findings.

B. Quantifiable dev artifacts-and-process data versus data about everything else

Another place to check for the lamppost fallacy is in overvaluing quantifiable data about programming artifacts and process over all sorts of data about everything else that matters about your project. Earlier today, Jesus González-Barahona mentioned the many communities -- dev, contributor, user, larger ecosystem -- that you might want to research. There's lots of easily quantifiable data about development, yes, but what is actually important to your project? Dev, user, sysadmin, larger ecology -- all of these might be, honestly, more important to the success of your mission. And we also know some things about how to get better at getting user data.

For help, I recommend the Simply Secure guides on doing qualitative UX research, such as seeing how users are using your product/application. And I recommend you read existing research on software engineering, like the findings in Making Software: What Really Works and Why We Believe It, the O'Reilly book edited by Andy Oram and Greg Wilson.

Tool 2: know what kind of assessment you're trying to do and how it plays into your theory of change

Another really important tool that will help you say no to some things and yes to others is knowing what kind of assessment you're trying to make, and how that plays into your hypothesis, your theory of change.

I'm going to mess this up compared to a serious education researcher, but it's worth knowing the basics of the difference between formative and summative assessments.

Formative assessment or evaluation is diagnostic, and you should use it iteratively to make better decisions to help students learn with better instruction & processes.

Summative assessment is checking outcomes at the conclusion of an exercise or a course, often for accountability, and judging the worth/value of that educational intervention. In our context as open source community managers, this often means that this data is used to persuade bosses & community that we're doing a good job or that someone else is doing a bad job.

As Dawn Foster last year said in her "Your Metrics Strategy" speech at the FLOSSMetrics meeting:

METRICS ARE USEFUL Measure progress, spot trends and recognize contributors.

Start with goals: WHY FOCUS ON GOALS? Avoid a mess: measure the right things, encourage good behavior.

Here's Ioana Chiorean, FLOSS Community Metrics meeting, January 30th 2015, "How metrics motivate":

Measure the right things... specific goals that will contribute to your organization's success

Dave Neary in 2014 in "What you measure is what you get. Stories of metrics gone wrong" at the Metrics meeting said:

be careful what you measure: metrics create incentives

Focus on business and community's success measurements

And this is tough. Because it can be hard to really make a space for truly formative assessment, especially if you are doing everything transparently, because as soon as you gather and publish any data, people will use it to argue that we ought to make drastic changes, not just iterative changes. But it might help to remember what you are truly aiming at, what kind of evaluation you really mean to be doing.

And it helps a lot to know your Theory of Change. You have an assessment of the way the world is, a vision of how you want the world to look, and a hypothesis about some change you could make, an activity or intervention you could perform to move us closer from A to B.

There's a chicken and egg problem here. How do you form the hypothesis without doing some initial measurement? And my perhaps subversive answer is, use ideas from other communities and research to create a hypothesis, and then set up some experiments to check it. Or go with your gut, your instinct about what the hypothesis is, and be ready to discard it if the data does not bear it out.

For help: Check out educational psychology, such as cognitive apprenticeship theory - Mel Chua's presentation here gives you the basics. You might also check out the Program/Grant Learning & Evaluation findings from Wikimedia, and try out how the "pirate metrics" funnel -- Acquisition, Activation, Retention, Referral, Revenue, or AARRR -- fits with your community's needs and bottlenecks.

Tool 3: if something doesn't work, acknowledge it

And the third tool is that when we see data saying that something does not work, we need to have the courage to acknowledge what the data is saying. You can move the goalposts, or you can say no and cause some temporary pain. We have to be willing to take bug reports.

Here's an example. The Wikimedia movement likes to host editathons, where a bunch of people get together and learn to edit Wikipedia together. We hoped that would be a way to train and retain new editors. But Wikipedia editathons don't produce new long-term editors. We learned:

About 52% of participants identified as new users made at least one edit one month after their event, but the percentage editing dropped to 15% in the sixth months after their event

And, in "What we learned from the English Wikipedia new editor pilot in the Philippines":

Inviting contribution by surfacing geo-targeted article stubs was not enough to motivate or help users to make their first edits to an article. Together, all new editors who joined made only six edits in total to the article space during this experiment, and they made no edits to the articles we suggested.Providing suggestions via links to places users might go for help did not appear to sufficiently support or motivate these new editors to get involved. 50 percent of those surveyed later said they didn’t look for help pages. Those who did view help pages nevertheless did not edit the suggested articles.

But over and over in the Wikimedia movement I see that we keep hosting those one-off editathons. And they do work to, for instance, add new high-quality content about the topics they focus on, and some people really like them as parties and morale boosters, and I've heard the argument that they at least get a lot of people through that first step, of creating an account and making their first edit. But that does not mean that they're things we should be spending time on, to reverse the editor decline trend. We need to be honest about that.

It can be hard to give up things we like doing, things we think are good ideas and that ought to work. As an example: I am very much in favor of mentorship and apprenticeship programs in open source, like Google Summer of Code and Outreachy. Recently some researchers, Adriaan Labuschagne and Reid Holmes, raised questions about mentorship programs in "Do Onboarding Programs Work?", published in 2015, about whether these kinds of mentorship programs move the needle enough in the long run, to bring new contributors in. It's not conclusive, but there are questions. And I need to pay attention to that kind of research and be willing to change my recommendations based on what actually works.

We can run into cognitive dissonance if we realize that we did something that wasn't actually effective. Why did I do this thing? why did we do this thing? There's an urge to rationalize it. The Wikimedia FailFest & Learning Pattern hackathon 2015 recommends that we try framing our stories about our past mistakes to avoid that temptation.

Big 'F' failure framing:

- We planned this thing: __________________________

- This is how we knew it wasn't working: __________________________

- There might have been some issues with our assumption that: __________________________

- If we tried it again, we might change: __________________________

Little 'f' failure framing:

- We planned this thing: __________________________

- This is how we knew it wasn't working: __________________________

- We think that this went wrong: __________________________

- Here is how to fix it: __________________________

For help with this tool, I suggest reading existing research evaluating what works in FLOSS and open culture, like "Measuring Engagement: Recommendations from Audit and Analytics" by David Eaves, Adam Lofting, Pierros Papadeas, Peter Loewen of Mozilla.

Priorities

I have a much larger question to leave you with.

One trend I see underlying a big chunk of FLOSS metrics work is the desire to automate the emotional labor involved in maintainership, like figuring out how our fellow contributors are doing, making choices about where to spend mentorship time, and tracking a community's emotional tenor. But is that appropriate? What if we switched our assumptions around and used our metrics to figure out what we're spending time on more generally, and tried to find low-value programming work we could stop doing? What tools would support this, and what scenarios could play out?

This is a huge question and I have barely scratched the surface, but I would love to hear your thoughts. Thank you.

Sumana Harihareswara, Changeset Consulting